Pairing nearshore staffing solutions, such as those provided by Contollo, with Azure Stream Analytics offers a dynamic approach to data management. By combining the agility of nearshore talent with the power of real-time analytics, businesses can adapt quickly to data-driven challenges. This article explores how Contollo’s nearshore staffing expertise complements Azure’s analytical capabilities, and how the combination can streamline data processes and support proactive decision-making.

Key Takeaways

Azure Stream Analytics enables real-time data analysis through its SQL-like Stream Analytics Query Language, driving faster decision-making and greater operational efficiency.

Azure Data Factory complements real-time analytics by managing less time-sensitive data through orchestrated, automated, and cost-effective data movement and transformation processes.

The evolution of business intelligence tools has expanded predictive and prescriptive analytics capabilities, giving organizations self-service and real-time analytics.

Nearshore staffing solutions offer access to skilled data professionals, cost savings, and efficient collaboration.

Real-Time Data Analysis with Azure Stream Analytics and Beyond

Azure Stream Analytics covers many real-time scenarios, but the landscape of real-time data analysis is vast and sometimes calls for different approaches. Where existing applications contain extensive business logic that can be reused, a service-oriented architecture (SOA) built on web services may be more appropriate. This approach integrates and orchestrates existing services to enrich and process data in real time, capitalizing on established business logic and blending seamlessly with current systems.

Contollo, a nearshore staffing agency, specializes in providing talent skilled in both Azure Stream Analytics and SOA with web services. Their professionals are equipped to determine the most effective real-time data analysis technique for each unique business context, ensuring that the chosen solution aligns with the project’s goals and existing infrastructure.

Integration and Synergy

The integration of Azure Stream Analytics with other tools and services can amplify its capabilities. Contollo, as a nearshore staffing agency, plays a crucial role in this process by delivering experts who can seamlessly integrate Azure Stream Analytics with existing web services through a service-oriented architecture. This integration facilitates automated deployment, identity and access management, analytics, and the utilization of established business logic.

Contollo’s teams use Azure Event Hubs to ingest streaming data and Azure Monitor to gain a comprehensive view of infrastructure and applications for real-time analytics and monitoring. To accommodate varying data workloads, they scale with services such as Azure Databricks or Azure Synapse Analytics. They also help safeguard data integrity by monitoring and logging data flows with Azure Monitor and Azure Log Analytics.

Combining Contollo’s nearshore staffing expertise with Azure services creates a robust platform for real-time data analysis. The platform enhances business processes and operations while leaving room for alternative approaches, such as SOA with web services, when they better serve a project’s needs.

Maximizing Business Potential with Real-Time Analytics

Real-time analytics solutions that employ techniques such as anomaly detection and behavioral analysis play a pivotal role in maintaining operational integrity and continuity. Detecting fraud as it happens, for example, reduces the financial and reputational damage it causes. With the flexibility of Contollo’s nearshore staffing solutions, businesses can select the real-time analytics approach that best fits their needs, changing how they operate and compete in a fast-paced digital landscape.
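Anomaly detection can start as simply as comparing each new value against a rolling baseline. The following Python sketch flags values whose z-score against the previous few readings exceeds a threshold. It illustrates the general idea under stated assumptions and is not Contollo’s method or the Azure Stream Analytics implementation (Stream Analytics offers its own built-in anomaly detection functions); the function name, window size, threshold, and sample amounts are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Flag readings that deviate strongly from the recent rolling window.

    Returns (index, value) pairs for readings whose z-score against the
    previous `window` readings exceeds `threshold`.
    """
    history = deque(maxlen=window)  # keeps only the most recent readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                # A flat window has no spread; treat any change as anomalous.
                if value != mu:
                    anomalies.append((i, value))
            elif abs(value - mu) / sigma > threshold:
                anomalies.append((i, value))
        history.append(value)
    return anomalies


# Hypothetical card transaction amounts; the final spike stands out.
amounts = [20.0, 22.5, 19.0, 21.0, 20.5, 23.0, 950.0]
print(detect_anomalies(amounts))  # [(6, 950.0)]
```

With these sample values only the final amount is flagged. A production detector would also need to account for seasonality and tune the threshold to balance false alarms against missed fraud.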

Identifying Critical Data Streams

Within the scope of real-time analytics, data streams differ in their importance. Businesses need to prioritize data streams based on their criticality to operations. For instance, a data stream related to security threats would have a higher priority than a data stream tracking website visits.

Other factors, such as the volume and velocity of data, also determine whether real-time stream analytics is necessary. The complexity of the required analysis and the resources and cost involved should be assessed as well before deploying real-time analytics. By identifying critical data streams, businesses can focus their resources on the most impactful data and maximize the value of their real-time analytics efforts.
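One way to make this prioritization explicit is a simple weighted score. The sketch below assumes each stream is rated from 1 to 5 on the factors above, with analysis complexity folded into a single cost rating; the `StreamProfile` class, the weights, and the ratings are hypothetical illustrations rather than a standard method.

```python
from dataclasses import dataclass


@dataclass
class StreamProfile:
    """Hypothetical 1-5 ratings for the prioritization factors."""
    name: str
    criticality: int  # operational impact if the data is handled late
    velocity: int     # how quickly the data loses its value
    volume: int       # how much data arrives per unit of time
    cost: int         # effort, complexity, and cost of real-time analysis


# Illustrative weights; adjust them to your own priorities.
WEIGHTS = {"criticality": 0.5, "velocity": 0.3, "volume": 0.2}


def priority(stream: StreamProfile) -> float:
    """Weighted benefit score, divided by the cost of real-time analysis."""
    benefit = (WEIGHTS["criticality"] * stream.criticality
               + WEIGHTS["velocity"] * stream.velocity
               + WEIGHTS["volume"] * stream.volume)
    return benefit / stream.cost


def rank_streams(streams):
    """Return stream names ordered from highest to lowest priority."""
    return [s.name for s in sorted(streams, key=priority, reverse=True)]


streams = [
    StreamProfile("website-visits", criticality=1, velocity=2, volume=5, cost=2),
    StreamProfile("security-threats", criticality=5, velocity=5, volume=3, cost=3),
]
print(rank_streams(streams))  # ['security-threats', 'website-visits']
```

Dividing by cost means a very critical stream can still rank lower if analyzing it in real time is disproportionately expensive, which mirrors the trade-off described above.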

Transforming Operations with Instant Insights

Consider a fleet management company that predicts equipment failures before they happen, or a logistics company that optimizes routes in real time using live shipment data, traffic conditions, and weather updates. These are not futuristic scenarios but real-world applications of real-time analytics. By leveraging the asynchronous programming capabilities of .NET Core and the power of streaming analytics, Contollo.net enables independent, responsive operations and delivers instant insights for informed decisions in industries where continuous operation is critical.
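The paragraph above refers to the asynchronous programming model of .NET Core. The sketch below illustrates the same idea with Python’s `asyncio` instead: each hypothetical stream (`gps`, `engine`) is consumed by its own task, and `asyncio.sleep` stands in for waiting on network I/O, so a slow stream does not hold up the others. Stream names, events, and delays are invented for illustration.

```python
import asyncio


async def process_stream(name, events, delay):
    """Consume one stream independently; `delay` simulates I/O latency."""
    handled = []
    for event in events:
        await asyncio.sleep(delay)  # yield control while "waiting" on I/O
        handled.append(f"{name}:{event}")
    return handled


async def main():
    # Each stream runs as its own task, so the two interleave instead of
    # running one after the other.
    return await asyncio.gather(
        process_stream("gps", ["pos1", "pos2"], delay=0.01),
        process_stream("engine", ["temp-ok", "temp-high"], delay=0.02),
    )


print(asyncio.run(main()))
```

`asyncio.gather` returns results in the order the coroutines were passed, regardless of which stream finished first.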

Real-time analytics is also transforming transportation by making route optimization more efficient. Real-time analysis of operation-critical data significantly improves fleet management and driver safety through predictive maintenance and accident prevention. Across industries, instant insights are enabling more efficient operations and better decision-making.
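At its core, real-time route optimization means recomputing the best route whenever live data changes the cost of a road segment. The sketch below uses Dijkstra’s shortest-path algorithm on a hypothetical road network with travel times in minutes; the graph, node names, and traffic update are invented for illustration, and production routing engines use far richer models.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over travel times.

    `graph` maps each node to a dict of {neighbor: travel_time}.
    Returns (total_time, path), or (float("inf"), []) if goal is unreachable.
    """
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        time, node, path = heapq.heappop(queue)
        if node == goal:
            return time, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (time + cost, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network; weights are travel times in minutes.
roads = {
    "depot": {"A": 10, "B": 15},
    "A": {"customer": 10},
    "B": {"customer": 8},
}
print(shortest_route(roads, "depot", "customer"))  # (20, ['depot', 'A', 'customer'])

# A live traffic update slows the A -> customer segment; re-plan.
roads["A"]["customer"] = 25
print(shortest_route(roads, "depot", "customer"))  # (23, ['depot', 'B', 'customer'])
```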

Use Cases and Success Stories

The applications of real-time analytics are far-reaching, spanning various sectors and industries. From healthcare to logistics and public safety, real-time data streams are being harnessed for diverse applications such as fraud detection, healthcare monitoring, predictive maintenance, and personalization in e-commerce.

In healthcare, real-time analytics is a game-changer. Monitoring patient vitals in real time provides instant alerts on critical changes in condition, enabling timely interventions and improving patient outcomes. In logistics, real-time analytics drives traffic management and route optimization, supporting more efficient supply chains, timely deliveries, and lower fuel costs. These examples highlight the transformative power of real-time analytics and its potential to drive innovation and enhance operations across sectors.

Periodic Data Refresh Strategies with Azure Data Factory

Although real-time analytics revolutionizes data management, it isn’t always the optimal solution for every task. Enter Azure Data Factory, a cloud-based data integration service that orchestrates and automates the movement and transformation of data, connecting to numerous cloud and on-premises data stores.

Azure Data Factory is particularly useful for data that doesn’t need real-time analysis. For example, reference data, which changes infrequently, can be refreshed on a schedule by Azure Data Factory pipelines and written to Azure Blob Storage with the correct date and time information in its path.
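When Stream Analytics reads reference data from Blob Storage, the blob path can encode when each snapshot becomes valid, which is what the date and time information is for. The sketch below assumes a job configured with the path pattern `products/{date}/{time}/product-list.csv`, a `YYYY-MM-DD` date format, and an `HH-mm` time format; the pattern, file name, and function name are hypothetical, so check them against your own job’s input settings. A Data Factory pipeline would write each refreshed snapshot to a path built this way.

```python
from datetime import datetime, timezone

# Assumed path pattern; {date} and {time} are filled in for each refresh.
PATH_PATTERN = "products/{date}/{time}/product-list.csv"


def reference_blob_path(valid_from: datetime) -> str:
    """Build the blob name for a reference-data snapshot.

    Normalizes to UTC, then formats the date as YYYY-MM-DD and the time as
    HH-mm to match the assumed job settings.
    """
    utc = valid_from.astimezone(timezone.utc)
    return PATH_PATTERN.format(
        date=utc.strftime("%Y-%m-%d"),
        time=utc.strftime("%H-%M"),
    )


print(reference_blob_path(datetime(2024, 3, 1, 6, 30, tzinfo=timezone.utc)))
# products/2024-03-01/06-30/product-list.csv
```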

To understand the operational differences between Azure Data Factory and Azure Stream Analytics, consider filling a swimming pool. A bucket (Azure Data Factory) moves water in discrete loads and suits filling the pool over time, while a garden hose (Azure Stream Analytics) delivers a continuous flow and suits maintaining the water level in real time.
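In Stream Analytics, the hose’s continuous flow is usually summarized with windowing functions such as `TumblingWindow`, which groups events into fixed, non-overlapping time intervals. The Python sketch below mimics a tumbling-window count to show the idea; it is not Stream Analytics code. It assumes integer timestamps in seconds, half-open [start, end) windows labeled by their end time (the service’s exact boundary convention may differ), and hypothetical truck IDs.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs. Each event
    falls into exactly one window [start, end), labeled by its end time.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_end = (ts // window_seconds + 1) * window_seconds
        counts[(window_end, key)] += 1
    return dict(counts)


events = [(1, "truck-1"), (4, "truck-2"), (9, "truck-1"), (12, "truck-1")]
print(tumbling_window_counts(events, 10))
# {(10, 'truck-1'): 2, (10, 'truck-2'): 1, (20, 'truck-1'): 1}
```

A batch job would instead count over the whole dataset at once; the windowed version produces a running series of small results as data flows in.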

Handling Historical and Batch Data

Real-time analytics brings live data to life, while historical data, rich with valuable insights, guides future decisions. Azure Data Factory is a serverless, elastic data integration service that efficiently manages less time-sensitive data requiring batching or complex transformations.

The types of data suited to Azure Data Factory include historical data, large batch data, and data that demands complex transformations, in contrast to the immediate processing performed by Azure Stream Analytics. Because its serverless architecture allocates resources on demand for each operation, Azure Data Factory can also be cost-effective. Organizations can therefore use it to glean insights from historical data while managing their resources efficiently.

Cost-Effective Data Management

Data management can be costly, especially with large volumes of data. Azure Data Factory offers an economical alternative: it automates data movement on a defined schedule, which suits large volumes of data that do not require real-time analytics.

Cost forecasting is another advantage. The Azure pricing calculator lets you estimate costs before adding resources, based on the number of activity runs and data integration unit (DIU) hours. Detailed billing can also be enabled in Azure Data Factory to break costs down by pipeline, making individual costs easier to understand.
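The kind of estimate the pricing calculator performs can be approximated by hand. The sketch below adds an orchestration charge (billed per 1,000 activity runs) to a data movement charge (billed per DIU-hour). Both prices are placeholders, not actual Azure rates, and the workload is hypothetical; the sketch also ignores other meters, such as data flow execution, that a real bill may include.

```python
def estimate_monthly_cost(
    activity_runs: int,
    diu_hours: float,
    price_per_1000_runs: float,
    price_per_diu_hour: float,
) -> float:
    """Rough estimate: orchestration charge plus data movement charge.

    Both prices are placeholders; look up current rates for your region.
    """
    orchestration = activity_runs / 1000 * price_per_1000_runs
    data_movement = diu_hours * price_per_diu_hour
    return round(orchestration + data_movement, 2)


# Hypothetical workload over 30 days: one daily pipeline run with 4 activities,
# one of which is a copy using 4 DIUs for 15 minutes.
runs = 4 * 30              # 120 activity runs
diu_hours = 4 * 0.25 * 30  # 30 DIU-hours
print(estimate_monthly_cost(runs, diu_hours,
                            price_per_1000_runs=1.0, price_per_diu_hour=0.25))  # 7.62
```

In this made-up example, data movement dominates the bill, which is why tuning DIU counts and copy duration usually matters more than trimming activity runs.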

Additionally, cost monitoring in Azure Data Factory is available at several levels, from the factory as a whole down to individual pipelines, enabling fine-grained cost control. Together, these features make Azure Data Factory a cost-effective data management solution.

Strategic Data Pipeline Design

Data pipeline design extends beyond merely moving data from one point to another. It requires strategic planning and careful consideration of various factors. Best practices for designing data pipelines in Azure include:

Ensuring scalability and elasticity

Implementing data security and compliance

Creating modular pipelines for ease of maintenance and reusability

Setting up robust monitoring and logging

Effective data pipeline design in Azure Data Factory entails the following steps:

Identify data sources

Transform data

Move data efficiently using partitioning strategies

Load data into the chosen destination
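These four steps can be expressed as small, composable functions, which also reflects the modularity best practice listed above. The sketch below is plain Python rather than the Azure Data Factory API: in Data Factory these steps would be pipeline activities, whereas here a hard-coded list stands in for the source and another list for the destination. All function names and data are hypothetical.

```python
from itertools import islice


def identify_sources():
    """Step 1: hypothetical source; a real pipeline would read from a store."""
    return [{"id": i, "amount": i * 10} for i in range(1, 8)]


def transform(rows):
    """Step 2: a simple transformation that adds a derived column."""
    return [{**row, "amount_with_tax": round(row["amount"] * 1.2, 2)} for row in rows]


def partition(rows, size):
    """Step 3: split rows into fixed-size partitions that can move independently."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def load(partitions, destination):
    """Step 4: append each partition to the destination (a list stands in for a sink)."""
    for batch in partitions:
        destination.append(batch)
    return destination


def run_pipeline(partition_size=3):
    """Chain the steps; each one can be tested or reused on its own."""
    return load(partition(transform(identify_sources()), partition_size), [])


result = run_pipeline()
print([len(batch) for batch in result])  # [3, 3, 1]
```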

Additionally, budgets and alerts can be configured to manage costs effectively, ensuring that resources are allocated efficiently and economically.

By following these best practices and working closely with data engineers, businesses can design efficient and effective data pipelines that meet their data management needs.

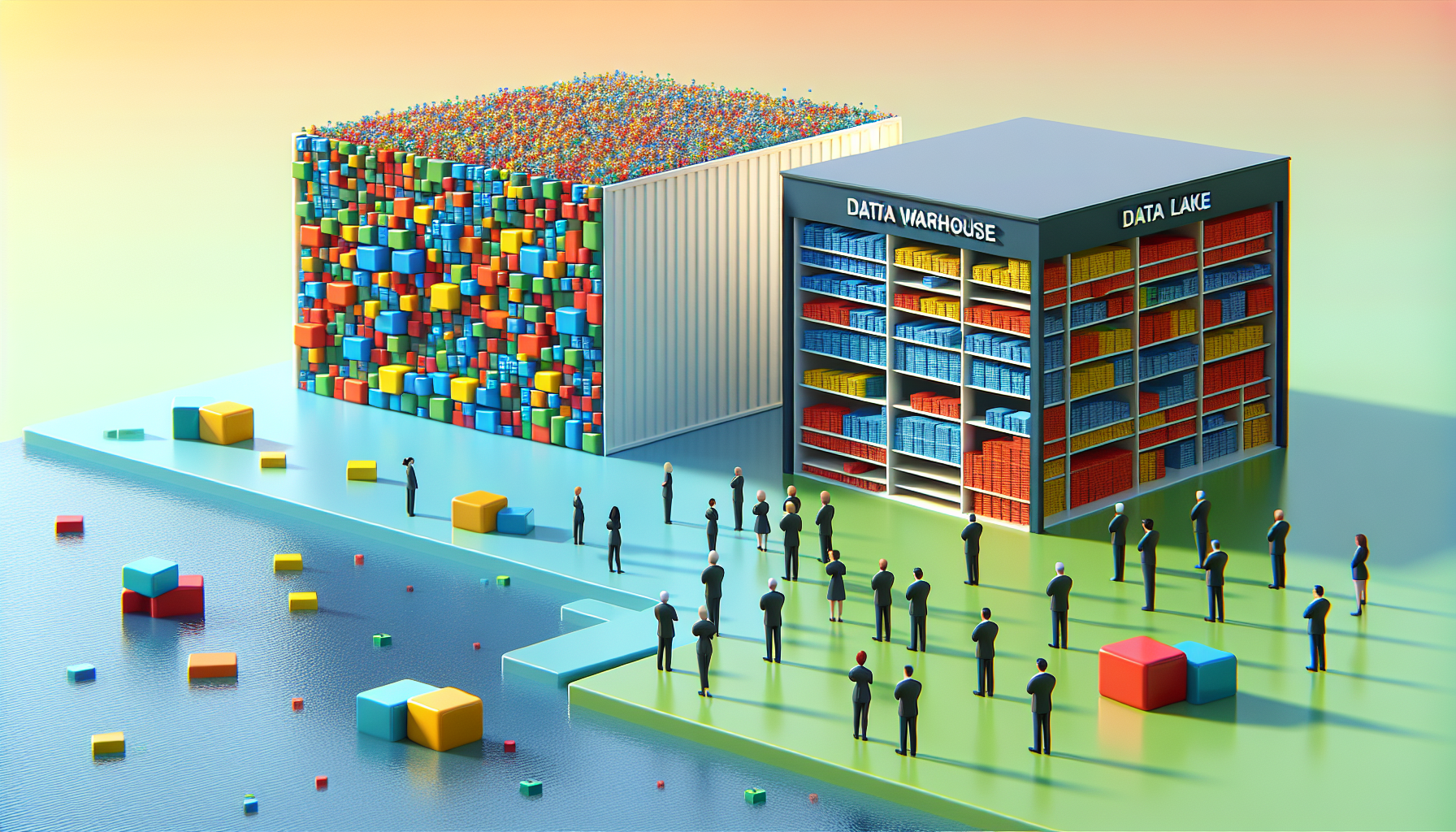

Navigating the Data Landscape: From Lakes to Warehouses

Two terms frequently encountered in data management are data lakes and data warehouses. Understanding the difference between them is crucial for businesses looking to make the most of their data. Data lakes are large-scale repositories that store raw data, including unstructured data, and enable big data analytics; data warehouses, on the other hand, are designed for structured, processed data and focus on analyzing historical data to find insights.

However, the choice between a data lake and a data warehouse isn’t a binary one. Modern data warehouses integrate multiple components aligned with industry best practices and are specifically engineered to offer streamlined data workflows. They contain historical data optimized for analysis, while Online Transaction Processing (OLTP) systems manage real-time transaction data. The architecture of a data warehouse is determined by an organization’s specific needs and is customizable to address unique business challenges.

Navigating the data landscape requires a clear understanding of these concepts and a strategic approach to choosing the right solution.

Understanding Data Lakes vs. Data Warehouses

There’s no one-size-fits-all approach to storing and managing data. Businesses need to understand the key differences between data lakes and data warehouses to choose the right solution. Data lakes are centralized repositories capable of storing a vast amount of raw data in its native format, including structured, semi-structured, and unstructured data, using a schema-on-read approach. On the other hand, data warehouses are relational in nature, designed for structured data, and use a schema-on-write approach to optimize for efficient SQL queries and analysis.
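The difference between schema-on-write and schema-on-read is easiest to see side by side. In the sketch below, one list stands in for a warehouse and validates records against a schema at ingestion, while another stands in for a lake that accepts raw JSON text and applies the schema only at read time. The schema, field names, and records are hypothetical.

```python
import json

# Hypothetical schema shared by both approaches.
SCHEMA = {"device_id": str, "temperature": float}


def matches_schema(doc):
    """True if every schema field is present with the expected type."""
    return all(isinstance(doc.get(field), kind) for field, kind in SCHEMA.items())


def write_validated(warehouse, record):
    """Schema-on-write: reject non-conforming records at ingestion time."""
    if not matches_schema(record):
        raise ValueError(f"record does not match schema: {record}")
    warehouse.append(record)


def write_raw(lake, raw_text):
    """Schema-on-read: store the raw payload as-is, with no validation."""
    lake.append(raw_text)


def read_with_schema(lake):
    """Apply the schema only at read time, skipping payloads that don't fit."""
    rows = []
    for raw in lake:
        doc = json.loads(raw)
        if matches_schema(doc):
            rows.append({field: doc[field] for field in SCHEMA})
    return rows


warehouse, lake = [], []
write_validated(warehouse, {"device_id": "d1", "temperature": 21.5})
write_raw(lake, '{"device_id": "d1", "temperature": 21.5, "note": "extra"}')
write_raw(lake, '{"free_text": "maintenance log"}')
print(read_with_schema(lake))  # [{'device_id': 'd1', 'temperature': 21.5}]
```

The lake keeps the free-text payload that the warehouse would have rejected, which is the flexibility schema-on-read buys at the cost of doing the validation work later.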

While data lakes are more scalable and cost-effective due to their ability to handle large volumes and types of data without predefined schema constraints, data warehouses provide a structured environment that simplifies querying and analysis. As a result, business users often find data warehouses more suitable for their needs, making them a preferred choice for standardized business intelligence tasks and operational reporting.

Understanding these differences can guide organizations to choose the right data storage solution that aligns with their business needs and objectives.

The Transition to Cloud-Based Storage Solutions

Advancements in technology are driving businesses towards cloud-based solutions for their data storage needs. Cloud data warehouses offer several benefits, including:

Cost-effective pay-as-you-go pricing model, providing significant savings compared to maintaining on-premises data warehouses

Scalability on demand, allowing businesses to adjust resource allocation to meet changing demands

Ability to handle fluctuations such as seasonal demand spikes

These features make cloud data warehouses an attractive option for businesses looking to optimize their data storage and management.

The performance of cloud data warehouses can surpass that of traditional on-premises solutions thanks to the cloud providers’ ability to dynamically deploy additional resources. Moreover, they provide robust security measures, high system availability, and reliable disaster recovery methods, including regular data backups without the need for businesses to invest in additional backup hardware. Therefore, transitioning to cloud-based storage solutions can enhance data storage and management practices, offering numerous benefits including cost savings, scalability, performance, and security.

Crafting a Comprehensive Data Strategy

Success in data management initiatives relies heavily on formulating a comprehensive data strategy. A well-designed data strategy aligns with an organization’s business objectives and guides its data management journey. Data architecture and technology selection should be driven by how well they meet the business’s requirements for:

Relevance

Accessibility

Performance

Integration with the current data lifecycle

A data strategy roadmap is pivotal. It details the steps needed to move from the current state to the desired future state while accounting for staff availability, budget constraints, and other ongoing projects. The roadmap keeps the organization’s data management initiatives aligned with its strategic goals and gives its journey toward a data-driven future a clear direction.

Leveraging Advanced Data Analytics for Digital Excellence

In the digital era, the key to business success lies in harnessing advanced data analytics. Data science employs tools, methods, and technology to derive meaningful insights from data, helping companies transform their operations and maintain a competitive edge. Data analytics methods such as descriptive analysis, which identifies trends and patterns, and data mining, which sorts data into categories, help organizations understand and use their data. With the help of data scientists, businesses can effectively analyze and interpret complex data sets.

Prescriptive analytics and clustering are integral to data science: prescriptive analytics suggests the best course of action, while clustering groups related data to surface patterns and anomalies that can reveal underlying business problems. While statistics focuses on the quantitative aspects of data, data science is inherently multidisciplinary, employing scientific methods to extract knowledge from diverse forms of data. By building an effective data analytics team, organizations can ensure that new technologies and processes are adopted and used efficiently.
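Clustering can be illustrated with k-means, one common clustering algorithm, applied to a single numeric feature. The sketch below groups hypothetical order values around two starting centroids; the small cluster of unusually large orders is the kind of pattern that might prompt a closer look. The data, starting centroids, and function name are assumptions chosen for illustration.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Group 1-D values around starting centroids using Lloyd's algorithm.

    Returns the final centroids and the values assigned to each cluster.
    """
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            # Assign each value to its nearest centroid.
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to its cluster's mean (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters


# Hypothetical order values in dollars.
order_values = [12, 15, 14, 210, 13, 205, 16]
centroids, clusters = kmeans_1d(order_values, [0.0, 100.0])
print(centroids)  # [14.0, 207.5]
print(clusters)   # [[12, 15, 14, 13, 16], [210, 205]]
```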

The Intersection of Machine Learning and Streaming Data

The magic unfolds at the intersection of machine learning and streaming data. Machine learning models are capable of recognizing complex patterns and predicting future trends more accurately using real-time data streams, thus enhancing decision-making processes. However, integrating streaming analytics with machine learning presents challenges such as ensuring scalability, maintaining data quality, and achieving infrastructure compatibility.
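The sketch below illustrates one simple way a model can learn from a stream: online linear regression trained with stochastic gradient descent, which updates its parameters after every event instead of retraining on a batch. The technique is a deliberately basic stand-in for production streaming ML, and the class name, learning rate, and simulated stream (where the true relationship is y = 2x + 1) are hypothetical.

```python
class OnlineLinearModel:
    """Linear model y = w*x + b, updated one event at a time with SGD."""

    def __init__(self, learning_rate=0.1):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        """One gradient step on the squared error of a single event."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return error


model = OnlineLinearModel()
# Simulated stream where the (hypothetical) true relationship is y = 2x + 1.
for step in range(2000):
    x = (step % 3) * 0.5
    model.update(x, 2 * x + 1)
print(round(model.w, 2), round(model.b, 2))  # 2.0 1.0
```

Because each update touches only one event, the model can keep adapting as the stream’s behavior drifts, which is the property that makes online learning attractive for streaming data.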

Despite these challenges, the integration of machine learning and streaming data powers numerous use cases, including:

Risk analysis

Predictive maintenance

Fraud detection

Dynamic pricing

These use cases span sectors such as finance, manufacturing, and retail, demonstrating the combination’s versatility. Together, machine learning and streaming data are transforming businesses and opening new avenues for innovation and growth.

Empowering Business Analysts with Actionable Insights

Business analytics forms the crucial link between data and decision-making. It involves the practice of using data, statistical analysis, and predictive modeling to inform strategic and operational decisions. Data analysts leverage business analytics to transform raw data into meaningful insights, guiding tactical and strategic initiatives.

Business analytics helps organizations make more informed decisions, optimize processes, identify opportunities, and mitigate risks. By understanding customer behavior and preferences through analytics, businesses can tailor their products and services, improving customer satisfaction and loyalty. Furthermore, analytics enables organizations to gain a competitive edge by uncovering hidden opportunities and understanding market trends and customer preferences.

The key components of business analytics include data, analysis, and insights, each playing a pivotal role in deriving actionable knowledge.

The Evolution of Business Intelligence Tools

The evolution of business intelligence tools has been remarkable. From manual analytics processes, they have evolved to advanced BI tools capable of:

Real-time descriptive, predictive, and prescriptive analytics

Embedded analytics

Artificial intelligence capabilities

Low-code/no-code development environments

These features have enhanced business intelligence workflows through custom software development.

Self-service BI tools have gained popularity, enabling users without technical expertise to autonomously create and interpret reports, reducing the dependency on IT departments. Furthermore, the integration of real-time analytics with visualization tools like Power BI has allowed for dynamic reporting and dashboarding, facilitating immediate monitoring and decision-making.

The evolution of business intelligence tools has empowered businesses to harness their data more effectively, driving informed decisions and business success.

Where does nearshore fit in?

Securing the right talent is pivotal in the realm of data management. Nearshore staffing provides access to a diverse pool of skilled professionals with expertise in various domains, including data science, data engineering, software development, and more. More than just access to skilled talent, nearshore staffing offers cost advantages compared to traditional onshore hiring models. By leveraging talent from nearby regions with lower labor costs, organizations can reduce overhead expenses associated with recruiting, training, and retaining skilled professionals.

Nearshore teams offer several advantages:

Close geographical proximity to clients, often sharing similar time zones or minimal time differences

Seamless communication, collaboration, and real-time interaction between internal teams and external partners

Enhanced efficiency and effectiveness of data management workflows

Cultural and language similarities with clients, fostering stronger relationships and communication channels

Finally, partnering with a nearshore software development company offers staffing scalability and flexibility to adapt to changing business needs and project requirements, ensuring that organizations have the right resources in place to meet evolving data management challenges effectively.

Summary

We’ve journeyed through the world of modern data management, exploring the intricacies of real-time data analytics, the benefits of Azure Stream Analytics, and the strategies for periodic data refresh with Azure Data Factory. We’ve navigated the data landscape, understanding the differences between data lakes and data warehouses, and the strategic design of data pipelines. We’ve also seen the value of nearshore staffing in successful data management projects.

As we conclude, it’s clear that effective data management is a multifaceted process that requires strategic planning, the right tools and technologies, and a capable team. By leveraging advanced data analytics, businesses can transform their data into actionable insights, driving business success and maintaining a competitive edge. The future of data management is exciting, and with the right approach, businesses can harness the power of their data to drive digital excellence.

Frequently Asked Questions

What is a nearshore software development company?

A nearshore software development company provides outsourced software development from a nearby country, usually one in a similar time zone, such as Mexico or other Latin American countries for companies in the U.S.

What is the difference between nearshore and offshore development?

The main difference between nearshore and offshore development is the location of the development partner: offshore companies are typically in distant countries with significant time-zone differences, while nearshore companies are usually in neighboring or nearby countries with similar time zones.

What is Power BI used for?

Power BI is a business analytics solution used for visualizing data, sharing insights, and embedding them in apps or websites. It is commonly used by business professionals and analysts for data visualization and reporting.

What is the difference between Azure Stream Analytics and Azure Data Factory?

The main difference between Azure Stream Analytics and Azure Data Factory is that Stream Analytics analyzes data in real time as it is generated, whereas Data Factory focuses on data integration, copying, and transformation for storage or further analysis.

How can real-time analytics improve business operations?

Real-time analytics can improve business operations by providing instant insights that enable prompt responses to events as they happen, which is particularly helpful in areas like fraud detection.